Maybe you’ve recently logged into your survey panel account, only to see a dreaded message telling you that your account has been frozen, suspended, or deactivated.

Your heart sinks, and confusion, maybe even anger, sets in. Why did the survey website freeze your account? What could you have done wrong?

Why this can happen

Market researchers who run online survey panels need to ensure data quality. After all, the purpose of market research is to shape the future of products and services, something that affects everybody. To ensure that the respondents on their panel (survey takers like you!) are not trying to “cheat” the system, they use a variety of methods to keep undesirable respondents out of their samples. When these respondents are caught, their accounts are often “frozen”, or suspended.

Types of undesirable respondents:

- Speeders are, as the name implies, people who speed through entire surveys, through grid questions specifically, or both. Speeders are typically caught using timestamps, and they often straightline as well. They are one of the easier types of undesirable respondents to weed out of a panel.

- Fraudulent respondents are those who misrepresent themselves by using fake names and/or addresses to create a fake identity. Market research firms frequently use source testing to determine whether or not a respondent is fraudulent. They may also cross-reference the registration data they receive with existing databases of blacklisted respondents.

- Inattentive respondents are those who, intentionally or not, do not put much thought into the way they answer a survey.

Why your account might be deactivated:

If your account gets frozen or deactivated, you have probably been classified as one of the types of undesirable respondents above. Specifically, you have probably triggered one of the following flags:

You failed the red herring questions

The purpose of red herring questions is to weed out inattentive respondents and speeders.

A red herring is something that distracts from the issue at hand. In online surveys, the term refers to random questions that seem to have nothing to do with the survey. For instance, in the middle of a survey you might encounter a question like, “Is a cactus an animal or plant type?” These questions can also be less obvious, such as when fictitious brands are added to the answer choices of a survey question.

Some members on our forum have shared their experiences with these funny trap questions they’ve encountered while taking surveys.

Your timestamps look funny

The purpose of timestamps is to weed out speeders.

If a survey should take 15 minutes and you complete it in 5, you may be labelled as a speeder. Researchers initially estimate how long a survey should take, based primarily on the style of questions asked (grid/matrix, drop-down boxes, etc.). Once other respondents have completed the questionnaire, the estimate is adjusted to the actual average completion time.

Note for cheaters: timestamps can be recorded at multiple points throughout a survey, so rushing through a survey and then waiting a few minutes before hitting the “submit” button is not going to work!
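To make the idea concrete, here is a minimal Python sketch of a per-section timestamp check. It is not any panel’s actual method: it assumes the survey records a timestamp (in seconds since the start) at each checkpoint, and it flags a respondent whose time on any section falls below half the median time other respondents spent on that section. The function names and the 0.5 cutoff are hypothetical.

```python
from statistics import median

# Hypothetical cutoff: a section done in under half the typical time is "too fast".
SPEED_RATIO = 0.5

def section_durations(checkpoints):
    """Convert checkpoint timestamps (seconds since start) into per-section durations."""
    return [later - earlier for earlier, later in zip(checkpoints, checkpoints[1:])]

def is_speeder(respondent, others, ratio=SPEED_RATIO):
    """Return True if any section took less than `ratio` times the median time
    that other respondents spent on the same section."""
    mine = section_durations(respondent)
    theirs = [section_durations(other) for other in others]
    for i, duration in enumerate(mine):
        typical = median(durations[i] for durations in theirs)
        if duration < ratio * typical:
            return True
    return False

# Three earlier respondents, each taking about 15 minutes over three sections.
others = [[0, 300, 600, 900], [0, 320, 640, 960], [0, 280, 560, 840]]

print(is_speeder([0, 280, 590, 910], others))  # False: every section at a normal pace
print(is_speeder([0, 100, 200, 900], others))  # True: rushed, then waited before submitting
```

Because the check looks at each section rather than only the total, the second respondent is flagged even though their overall time (900 seconds) matches the typical completion time.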

You’re “straightlining”

If you provide very little variation in your responses, for example by choosing the same answer for every row of a grid, you could be “straightlining”. This is especially evident in grid/matrix-type questions. How do researchers know you’re doing this? Your answers are compared with those of other respondents who have taken the same survey.

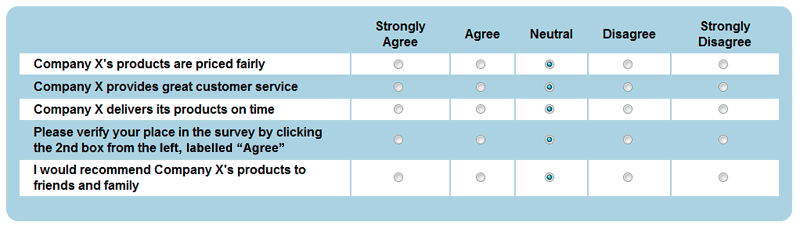
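A simple straightlining check could be sketched as follows, again in Python and under stated assumptions: grid answers are coded 1 to 5, a respondent counts as straightlining when at least 90% of rows share the same answer, and, to reflect the cross-referencing described above, a respondent is flagged only if most other respondents did not answer the grid that uniformly. The names and the 0.9 threshold are hypothetical, not an industry standard.

```python
from collections import Counter

# Hypothetical cutoff: 90% or more identical answers counts as straightlining.
STRAIGHTLINE_SHARE = 0.9

def same_answer_share(answers):
    """Fraction of grid rows that received the respondent's most common answer."""
    most_common_count = Counter(answers).most_common(1)[0][1]
    return most_common_count / len(answers)

def is_straightliner(answers, others, threshold=STRAIGHTLINE_SHARE):
    """Flag a respondent whose grid answers barely vary, but only when fewer than
    half of the other respondents answered the same grid that uniformly."""
    if same_answer_share(answers) < threshold:
        return False
    uniform_others = sum(same_answer_share(other) >= threshold for other in others)
    return uniform_others < len(others) / 2

# Answers to a hypothetical 10-row grid on a 1-5 scale.
others = [
    [1, 2, 4, 5, 3, 2, 4, 1, 5, 3],
    [5, 4, 4, 3, 5, 2, 1, 4, 3, 4],
    [2, 2, 3, 4, 1, 5, 3, 2, 4, 3],
]

print(is_straightliner([3] * 10, others))                        # True
print(is_straightliner([4, 4, 5, 3, 4, 2, 4, 5, 3, 4], others))  # False
```

The cross-reference step matters: if nearly everyone gives the same rating to a grid because the items genuinely deserve it, uniform answers are not suspicious on their own.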

You didn’t follow instructions properly

Following instructions might sound like an obvious thing to do while taking a survey, but many respondents fail this basic test. Sometimes an instruction is a standalone question, such as “write the word ‘cow’ in the blank box”; at other times it is built into a grid. Market researchers can also be sneaky and reverse the order of questions or rating scales partway through, so that a respondent who rates everything consistently high or low without reading ends up contradicting themselves (this relates to the logic traps below). Not following instructions properly, then, can flag your account for inconsistent responses.

By including a “checkpoint” row with its own instruction in the middle of a table-format question, researchers are able to verify respondents’ attention and remove straightliners when necessary.

You failed the logic traps

If you provide answers that are logically inconsistent with each other, your account may be flagged. For instance, if at the beginning of a survey you agree with the statement “I purchase tacos at least once per month”, but later in the survey answer “no” to the statement “I purchase tacos at least ten times per year”, then you have failed the logic trap within the survey: buying tacos at least once a month means buying them at least 12 times a year.
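Logic traps boil down to pairs of answers where one implies the other. The Python sketch below encodes the taco example as a single implication rule and reports any rule a respondent breaks; the question IDs and function name are hypothetical, and real survey platforms express such checks in their own ways.

```python
# Hypothetical question IDs; True means the respondent answered "yes"/"agree".
# Each rule (premise, conclusion) says: a "yes" to premise requires a "yes" to conclusion.
RULES = [
    # At least once a month means at least 12 purchases a year, which covers "at least ten".
    ("tacos_at_least_monthly", "tacos_at_least_10_per_year"),
]

def failed_logic_traps(answers, rules=RULES):
    """Return every rule whose premise was answered True but whose conclusion was False."""
    return [
        (premise, conclusion)
        for premise, conclusion in rules
        if answers.get(premise) is True and answers.get(conclusion) is False
    ]

print(failed_logic_traps({"tacos_at_least_monthly": True, "tacos_at_least_10_per_year": False}))
# [('tacos_at_least_monthly', 'tacos_at_least_10_per_year')]
print(failed_logic_traps({"tacos_at_least_monthly": False, "tacos_at_least_10_per_year": False}))
# []
```

The second respondent is consistent: someone who doesn’t buy tacos every month may well buy them fewer than ten times a year. Only a “yes” to the first statement followed by a “no” to the second breaks the rule.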

Inconsistency in responses

Questions that seem redundant are often used to verify that a respondent is not fraudulent. Being asked for your zip code or city of residence at both the beginning and the end of a survey can seem annoying, but it serves a purpose: if your answers do not match, you could be classified as a fraudulent respondent.

You failed the source testing

When you register with a survey panel, some checks are done so that fraudsters can’t dupe the system and automated bots can’t register successfully. These checks include:

- E-mail/IP validation – ensuring a respondent’s e-mail address is unique

- Postal address verification – verifying that the postal address provided actually exists

- Proxy detection – checking whether a registrant is hiding their true IP address behind a proxy

- Other checks that vary from panel to panel

Another possible check is verifying that other members of your household are not also members of the panel. This applies only to panels that forbid it, and such a rule is stated in the panel’s terms of service.

You failed to comply with other panel rules

Survey panels often have their own unique set of rules that members must abide by. These are listed in the panel’s Terms of Service, which you agree to when you first become a member. At the very least, when registering with a panel, scan the terms to see if anything there could be a problem for you. If the terms state that your account can be deleted for a reason you don’t agree with, consider joining a different panel.

Respondent monitoring

A panel of respondents has to be regularly maintained by market researchers, not only to ensure that data quality isn’t compromised by fraudsters, speeders, and the like, but also to make sure that the panel is representative of the general population. If the number of surveys you receive drops, it could simply mean that the panel already has enough people who match your demographic.

Respondents can also be monitored for completing the same online survey multiple times, either within a single panel or across multiple panels. It’s in your best interest, then, not to intentionally take the same survey through a different survey panel if you’ve already completed it once.

Conclusion

If you’re an honest survey taker, the chances of having your account suspended or cancelled are very low. However, if you decide to intentionally answer surveys falsely, know that you are putting yourself at risk of having your account suspended. Worse, if you are placed on a “blacklist”, you might not be sent surveys from other market research panels either.

Market research affects all of us, and providing companies with false data can adversely affect not only the future marketplace but also market research as a whole. If you enjoy taking surveys and would like to keep voicing your opinions for years to come, don’t cheat the system!
